I decided to move on from WordPress and start a new blog. You can read about my reasons here.

Follow along and read my new post about Vim: How to Configure Syntastic.

Posted in development | Leave a Comment »

I’ve been using spacehi for years. It highlights invisible characters: trailing spaces and tab characters.

Here’s how it looks if you toggle it on and off:

In my dotfiles setup, it’s turned on by default.

In most cases, whitespace characters do not matter. But sometimes they do. For example, Go and Python are picky about spaces. Also, a mix of spaces and tabs will look different for different people and their editors, because nobody can agree on how many spaces a tab character is (4 or 8?!). This is something I’ve talked about before.

This week, for the second time in a couple of weeks, I lost a bunch of time on another whitespace: character 160, the non-breaking space. To make a long story short, it was a copy and paste from Skype, and the JSON parser I was using choked on it.
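A quick way to check a file for this character from the shell, as a minimal sketch. The function name `find_nbsp` is made up for illustration, and it assumes the file is UTF-8, where character 160 is encoded as the two bytes 0xC2 0xA0.

```shell
# find_nbsp FILE...: list the lines that contain a UTF-8 non-breaking space.
# (find_nbsp is a hypothetical helper name, not part of spacehi.)
find_nbsp() {
  # printf with octal escapes is portable; \302\240 is U+00A0 in UTF-8.
  nbsp=$(printf '\302\240')
  # LC_ALL=C makes grep match raw bytes regardless of the current locale.
  LC_ALL=C grep -n -- "$nbsp" "$@"
}
```

Running `find_nbsp some-file.json` prints each offending line with its line number, and exits non-zero when the file is clean.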

It seems spacehi hasn’t been touched in (almost exactly) 10 years. I found its mirror on github. But it didn’t handle non-breaking spaces, so I forked it.

I’m willing to maintain it; it’s a very simple Vim plugin. Bug reports and improvements are welcome.

Posted in development | Tagged vim | 1 Comment »

It’s always nice to get something for free. That’s how I feel about JSLint. Running your JavaScript code through JSLint gives you a few advantages:

There’s a whole bunch of stuff JSLint will pick up for you.

I have talked before about JSLint in the context of SpiderMonkey, but, nowadays, I install node.js for a few things. If I run JSLint through node.js, that means I won’t have to install SpiderMonkey anymore.

I admit, these pieces of software are moving fast and the instructions (or lack thereof) are limited. But these things will vary with your OS and skill level.

I’m going to focus on the Vim integration, but go ahead and install Node and install NPM.

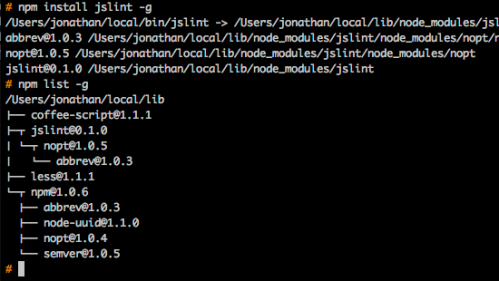

I recommend the simply named “jslint”. You can look it up on GitHub as node-jslint.

Make sure you don’t forget that “-g” flag with NPM. NPM changed a lot in version 1.0.

The end goal is:

You are in a JavaScript file. You press F4, Vim runs JSLint on your file, parses the errors, and puts your cursor on the exact location of the first error, with the other ones waiting in the quickfix list.

The main part of integrating with Vim to “compile” something is to set makeprg and errorformat (aka efm). If you ever need to integrate with something else, be sure to Google for those.

Since we are going to invoke :make all the time, I’m going to bind it to F4. (put it in your .vimrc)

Step by step:

Next, create $HOME/.vim/ftplugin/javascript.vim. Put these lines into it:

The variable makeprg is just what is invoked when you run :make. The variable errorformat holds the instructions on how to parse the error messages of the “compiler”. That variable and how to configure it are a whole world of complexity.

Now, restart Vim and open some JavaScript file you have lying around. Press F4. Be ready for a lesson in humility.

If things don’t work out, try this:

Posted in development, javascript, vim | 12 Comments »

It all started with CoffeeScript. As with all languages I play with, one of my first steps is to look for a Vim syntax file. Thankfully, the CoffeeScript page itself links to kchmck’s vim-coffee-script on GitHub. So far, so good.

Here’s the first step:

Wait … what?! I hate having to install software to … install software. At this point, I was ready to close the tab but it was a tpope project. That’s usually a sign of quality. I was ready to give this pathogen thing another look.

So … pathogen lets you dump “bundle” directories under ~/.vim/bundle/ and will set up the various Vim variables so that the plugin, ftplugin, syntax, ftdetect are all hooked up correctly. That’s nice; it solves a lot of the pain I’ve felt over the years about trying various vim plugins and messing with my setup.

In theory, you would do something like:

And add at the top of your .vimrc:

That’s pretty close to how I have it set up. While I was making up my mind about pathogen, I found Tammer Saleh’s post about pathogen. Besides the details I outlined above, he suggests git cloning the repository and removing the .git instead of playing with git submodules. I could not agree more. Of course, I have to deal with that situation only because my ~/.vim is under git. (like all my dotfiles, read more)

I simplified his script for my own purposes: (on github)

refresh() {

local url="$1"

local dir="$2"

rm -rf "$dir"

git clone --depth=1 "$url" "$dir"

rm -rf "$dir/.git"

if [ -f "$dir/.gitignore" ]; then

rm "$dir/.gitignore"

fi

}

refresh https://github.com/scrooloose/nerdcommenter.git nerdcommenter

refresh https://github.com/vim-scripts/matchit.zip.git matchit

refresh https://github.com/tpope/vim-haml.git vim-haml

refresh https://github.com/timcharper/textile.vim.git textile

refresh https://github.com/kchmck/vim-coffee-script.git vim-coffee-script

I think the --depth=1 on the git clone is a nice touch … especially since I delete the git directory right after the download. The CoffeeScript plugin is working well and it keeps being committed to. The refresh script is quite useful.

I’m planning on packaging a few of the plugins I wrote and “bundle” them too. (vim-slime)

Posted in development | 8 Comments »

2010 is over and, like last year, here’s what I read during the year:

I read 42 books in 2010. That’s 8 books more than last year, or a 23% increase. I’m not sure how representative that is, however. It seems that, on average, I read about 3 books a month.

Out of those 42 books, 16 were audio books. (38%)

Posted in books | 2 Comments »

I was curious to know how many books, on average, I read a month. I don’t expose this information directly on bookpiles either. You could extract it from the RSS feed. It’s one of the features I would like to add when I understand what information I want to present and how it is best presented.

In the meantime, I ran a query in the database and came up with this:

I felt 80% done. Then, I realized I didn’t quite know how I would extract, from the command-line, the sum, mean, standard deviation, minimum and maximum value. Of course, I could run it through R. Or Excel… The question wasn’t how to do statistics in general — it was how to do it as a filter … easily … right now.

A little research didn’t turn up any obvious answer. (please, correct me if I missed an obvious solution)

I wrote my own in awk. (awk is present on ALL the machines I use)

Here’s what the output looks like:

I included it in my dotfiles: the awk code and a bootstrap shell script (used above).
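For illustration, here is a minimal sketch of what such a filter can look like. It is not the script from the dotfiles: the function name `stats`, the output layout, and the choice of the population standard deviation are all assumptions. It reads one number per line (first field) and skips blank lines.

```shell
# stats: read numbers (one per line) and print summary statistics.
# Hypothetical sketch; name and output format are made up for this example.
stats() {
  awk '
    NF == 0 { next }                      # ignore blank lines
    {
      x = $1 + 0                          # force numeric interpretation
      n++
      sum += x
      sumsq += x * x
      if (n == 1 || x < min) min = x
      if (n == 1 || x > max) max = x
    }
    END {
      if (n == 0) { print "no data"; exit 1 }
      mean = sum / n
      var = sumsq / n - mean * mean       # population variance
      if (var < 0) var = 0                # guard against rounding error
      printf "n=%d sum=%g mean=%g stddev=%g min=%g max=%g\n", n, sum, mean, sqrt(var), min, max
    }
  ' "$@"
}
```

Because it reads standard input when no file is given, it drops in at the end of a pipeline, for example `cut -f2 counts.tsv | stats` (the file name is only an example).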

Posted in cli | Leave a Comment »

This project started out as a list of books in a text file.

When I think about a book, I think about its content, the people who talked about it and how it made me feel. Central to those thoughts is the visual representation of the book itself: its cover. A list in a text file was not the best way to think about books. Over time, I realized that it would be the kind of problem suited for a small web application.

I spent many hours working on this project. It used to be an excuse to play with Ruby on Rails. It used to be an excuse to play with the limits of rich-client JavaScript applications. It used to be an excuse to play with client and server-side optimizations, not by necessity but by a conscious effort to want to try things on a project I fully understand.

This is an application I designed for myself and that I use, for the lack of a better word, religiously. Hearing about books I want to read, buying a book, starting a new book, or finishing one, these are events that make me want to go to my profile and update it.

This application was initially meant to replace a text file. But the nature of a public display of books created new possibilities. When it comes to people I know, I want to know what they are reading so that we can talk about it the next time we meet.

“How was that book?”

Also, you can look at what people have read and discover what interests them. I have had a lot of interesting discussions after people browsed the books I have read.

Finally, this is also meant to be a portfolio piece. I can send people to the site to have a look at what I can do. The project is open-source and people can read the code and reach their own conclusions.

I’m open to comments and suggestions. Let me know what you think.

Code: http://github.com/jpalardy/bookpiles

Live app: http://bookpiles.ca

Posted in development, ruby, web | Leave a Comment »

A few years ago, when I was more into python, I stumbled on python challenge. It was great fun, I learned a bunch of stuff and it forced me to play with libraries I wasn’t familiar with.

In their own words: (about)

Python Challenge is a game in which each level can be solved by a bit of (Python) programming.

The Python Challenge was written by Nadav Samet.

All levels can be solved by straightforward and very short scripts.

Python Challenge welcomes programmers of all languages. You will be able to solve most riddles in any programming language, but some of them will require Python.

Sometimes you’ll need extra modules. All can be downloaded for free from the internet.

It is just for fun – nothing waits for you at the end.

Keep the scripts you write – they might become useful.

People who know me know that I read a lot. I am, therefore, painfully aware that books are not the best way to learn things.

What is the best way to learn something?

To be honest … I don’t know. Not books, not screencasts… There are better ways: a one-on-one session, pair programming. On the side of DOING there is always: DOING more. Read more code, program more, release more.

In the spirit of the python challenge, I released today Command-line One-liner Challenges.

The idea is not exactly the same: I do provide solutions and you are allowed to move on based on your interests (or frustration).

I strive to make each challenge look and feel like any other challenge. The directory structure will be something like this:

Each challenge is its own directory (numbered). Inside it, you can find a very short instructions.txt file. There are 2 subdirectories: problem and solution. Those subdirectories should be the same except for the content of the compare.sh file.

Look at the input.txt file. Then, look at the expected.txt file. Imagine how, as a one-liner, you could transform input.txt into expected.txt.

You are supposed to run compare.sh. Just open it and fill in the blanks, so to speak.

convert() {

cat "$@"

}

convert input.txt > actual.txt

${DIFF:-diff -q} actual.txt expected.txt
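To make “fill in the blanks” concrete, here is a sketch of a solved compare.sh for a made-up challenge (print each distinct line once, sorted). The challenge, the sample data and the scratch directory are all hypothetical; only the `convert` / `diff` structure comes from the script above.

```shell
# Hypothetical challenge: print each distinct line of input.txt once, sorted.
convert() {
  sort -u "$@"
}

# Scratch directory with made-up sample data standing in for a real challenge.
workdir=$(mktemp -d)
printf 'pear\napple\npear\nfig\n' > "$workdir/input.txt"
printf 'apple\nfig\npear\n' > "$workdir/expected.txt"

convert "$workdir/input.txt" > "$workdir/actual.txt"

# diff -q prints nothing and exits 0 when the two files match.
${DIFF:-diff -q} "$workdir/actual.txt" "$workdir/expected.txt" && echo "solved"
```

Setting DIFF to another tool (say, `DIFF="diff -u"`) swaps in a more verbose comparison without touching the script.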

The project’s README contains more information.

This is meant to be fun. Clone the repository and give it a go. I’m going to push more challenges over time, so you might want to watch the repo.

If you have a better solution than what I provide, please send it to me and I’ll find a way to include it in the project.

Also, if you have an idea for a challenge, let me know.

Posted in development | 3 Comments »

Just after I was done writing Managing PATH and MANPATH, I stumbled on “man man” and put to rest the mysteries of MANPATH.

If MANPATH is defined, it will be used to look up man pages.

If MANPATH is NOT defined, the manpath config file is going to be used. Depending on the OS you are using, it might be something like /etc/man.conf (Mac OS X) or /etc/manpath.config (Ubuntu).

Here’s an excerpt from “man man” (Mac OS X):

It says there’s a command line flag to override MANPATH (-M), but I don’t think that’s excessively useful.

Here’s something useful: when you aren’t sure what man page you’re going to get, try the -w flag.
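As a small sketch of using that flag, the wrapper below prints the file that man would open, or a short message when nothing is found. The function name `where_man` and the message text are made up for this example; only the `-w` flag comes from the man page.

```shell
# where_man NAME: print the path of the man page that `man NAME` would show.
# (where_man is a hypothetical helper name.)
where_man() {
  # -w prints the location of the page instead of displaying it.
  man -w "$@" 2>/dev/null || echo "no man page found for: $*"
}
```

Calling `where_man ls` before and after changing MANPATH is an easy way to see which copy of a page wins.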

Posted in development | Leave a Comment »

My PATH variable used to be a mess. I have used UNIX-like systems for 10 years and have carried around my configuration files in one form or another since then.

Think about Solaris, think about /opt (or /sw), and change the order based on different requirements.

I have seen a lot of people devise clever if-then-else logic with OS-detection. I have seen yet others, myself included, who tried to aim for the most comprehensive and all-inclusive PATH.

In the end, all that matters is that when you type a command, it IS in your path.

As for MANPATH, the situation was even worse. I used to depend on (and hope that) the OS-inherited MANPATH contained everything I needed. For a long time, I didn’t bother to set it right and just googled for the man pages if/when I was in need.

Invoking man for something I just installed often meant getting no help at all.

When it comes to bin and share/man directories, there are a handful of predictable places to look. For PATH:

Notice the bin and sbin combinations. And for MANPATH:

It should be clear that there is a lot of duplication there. Also, if you change the order of your PATH, you should probably change the order of your MANPATH so that the command you get the man page for is the command invoked by your shell. The GNU man pages are not very useful when you are using the BSD commands, on Darwin, for example.

Here’s the plan:

What you get:

Here’s something you can put in your .bashrc:

# unshift_path(path)

unshift_path() {

if [ -d "$1/sbin" ]; then

export PATH=$(prepend_colon "$1/sbin" $PATH)

fi

if [ -d "$1/bin" ]; then

export PATH=$(prepend_colon "$1/bin" $PATH)

fi

if [ -d "$1/share/man" ]; then

export MANPATH=$(prepend_colon "$1/share/man" $MANPATH)

fi

}

# TABULA RASA

export PATH=""

export MANPATH=""

unshift_path "/usr/X11"

unshift_path ""

unshift_path "/usr"

unshift_path "/usr/local"

unshift_path "/opt/local"

unshift_path "$HOME/local"

unshift_path "$HOME/etc"

export PATH=$(prepend_colon ".local" $PATH)
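The snippet above calls prepend_colon, whose definition is not shown. A minimal sketch of one way to write it, assuming its job is to put the first argument in front of the second, separated by a colon, without leaving a stray colon when the second is empty (this is a guess at the behavior, not the original helper):

```shell
# prepend_colon(val, list)
# Assumed behavior: print "val:list", or just "val" when list is empty.
prepend_colon() {
  if [ -z "$2" ]; then
    echo "$1"
  else
    echo "$1:$2"
  fi
}
```

It has to be defined before the first unshift_path call. Avoiding the stray colon matters because an empty entry in PATH is treated as the current directory.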

I use $HOME/local to store machine-specific binaries/scripts. For example, that’s where I install homebrew on Mac OS X. That’s also where I would put cron scripts or other “I just use this script on this machine” type of things.

I use $HOME/etc to store binaries I carry around with my configuration files. That’s where I clone my dotfiles project.

Finally, the relative path .local is an interesting hack. It allows for directory-specific binaries. This solves the “I just use this script when I’m in that directory” problem. This trick is discussed in this blog post.
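A small demonstration of how the relative entry behaves, as a sketch: the scratch directory, the script name hello-local and its output are all made up for the example.

```shell
# Scratch project with one directory-specific script (all names are made up).
demo=$(mktemp -d)
mkdir -p "$demo/project/.local"
cat > "$demo/project/.local/hello-local" <<'EOF'
#!/bin/sh
echo "hello from this directory"
EOF
chmod +x "$demo/project/.local/hello-local"

# The relative entry is resolved against whatever the current directory is.
PATH=".local:$PATH"

# Inside the project, the script is found through .local ...
(cd "$demo/project" && hello-local)

# ... while anywhere else the lookup fails.
(cd "$demo" && hello-local) 2>/dev/null || echo "not found outside the project"
```

Because the entry is relative, it applies to every directory you cd into, so it is best kept for directories whose contents you trust.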

Posted in cli | 3 Comments »